Date & Location: April 9, 2025, Umeå University, Västerbotten, Sweden

The workshop was organised by the AI Policy Lab in collaboration with members of the AI Technologies for Sustainable Public Service Co-creation (AICOSERV) project.

Overview

The workshop brought together more than 50 stakeholders from the public and private sectors, as well as academia, to explore the relationship between barriers to AI adoption in public services and the skills and expertise required to overcome them.

A central theme of the workshop was “question zero”: the fundamental question of whether AI should be used at all in a given context. As AI technologies continue to advance and expand into complex public sector tasks, the assumption that AI is always the right or necessary solution must be critically examined. The workshop challenged participants to consider not only how AI can be implemented but also whether it should be, emphasising that responsible adoption begins by assessing the appropriateness and desirability of AI in specific domains.

This foundational concern set the tone for broader discussions about trust, governance, transparency, and the skillsets required to navigate the opportunities and risks of AI in public service.

Keynote Address

Professor Virginia Dignum, Director of the AI Policy Lab, opened the workshop with a keynote titled “Governing AI: Why, What, How?”

She addressed the societal and governance implications of AI, focusing on the need to critically evaluate when and how AI should be integrated into public service contexts. Her talk stressed the importance of not overlooking ethical, legal, and operational challenges in the rush to adopt AI.

Regional Case Study: AI in Västerbotten

Across the wide range of public services open to AI adoption, a recurring set of challenges emerges. Whether deploying AI-driven diagnostic tools in healthcare or implementing predictive analytics within smart city infrastructures, public and private sector actors and community stakeholders face diverse barriers. Henry Lopez-Vega, fellow at the AI Policy Lab, presented on the challenges of AI adoption in the Västerbotten region in his session titled “What are the challenges with AI (in Västerbotten)?”

His research identified three core barriers to building a responsible AI ecosystem:

- Technological infrastructure and processes within organisations

- Organisational culture and resistance to change

- Lack of clarity around AI governance and ownership

Group Discussions: Skills and Stakeholder Engagement

In the second half of the workshop, participants engaged in group discussions focusing on organisational challenges, key stakeholders, and barriers to implementation. Each group then mapped the skills and knowledge needed for responsible AI adoption in their contexts.

For example, in the case of AI-supported recruitment processes, participants identified several critical barriers:

- Lack of transparency in AI decision-making

- Biases in training data

- Limited legal and ethical guidelines for automated hiring

To address these issues, participants emphasised the need for professionals with a blend of competences, including:

- Knowledge of data protection and anti-discrimination legislation

- Skills in evaluating and auditing AI systems

- Awareness of ethical considerations in algorithmic decision-making

Findings and Framework

Growing efforts to implement AI across public sector domains raise a critical question: what types of professionals, equipped with which skills and competences, should lead the integration of responsible AI? Defining the essential skills, knowledge, and professional competences required for the effective and ethical deployment of AI in both public and private sector services is therefore a key priority.

A key outcome of the workshop was the identification of a recurring set of challenges affecting responsible AI adoption in public services. These include:

- Low levels of trust

- Lack of transparency

- Unclear ownership and responsibility

- Insufficient stakeholder awareness

- Limited AI literacy and governance skills

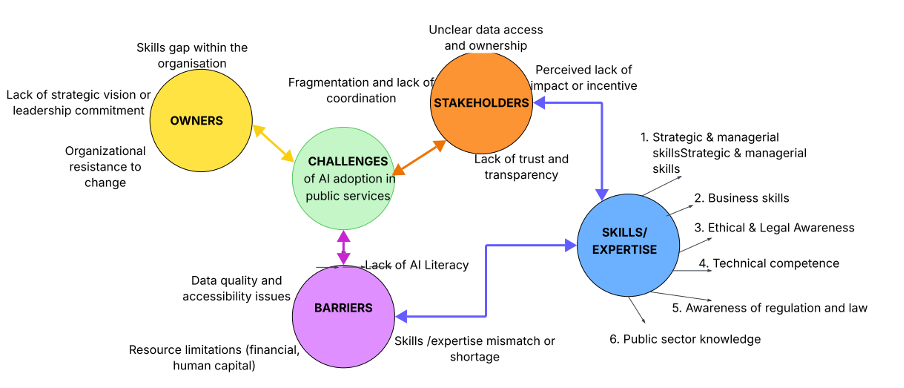

To address these challenges, we propose the conceptual framework depicted in Figure 1. This framework highlights the urgent need to cultivate professionals who combine technical expertise, ethical sensitivity, and domain-specific knowledge to lead responsible AI integration. It maps the interconnected layers that influence the deployment and responsible use of AI technologies in public services, including:

- Contextual Challenges – such as low trust, resistance to change, and limited organisational readiness

- Structural Barriers – including unclear project ownership, inadequate governance frameworks, and insufficient infrastructure

- Skill and Knowledge Gaps – highlighting the lack of AI literacy, ethical awareness, and domain-specific competences

- Stakeholder Roles – outlining the importance of identifying and engaging relevant actors (e.g. policymakers, IT professionals, legal advisors, and citizens) throughout the AI lifecycle

This framework is intended to guide structured reflection and planning around AI deployment, helping ensure that technologies are not only functional but also trustworthy, inclusive, and aligned with public values. As such, it can serve as a practical tool for organisations seeking to integrate AI technologies responsibly. It encourages a systemic perspective, one that moves beyond technical feasibility to consider broader organisational, social, and ethical dimensions.

By applying this framework, decision-makers and project leads can:

- Identify context-specific challenges before adopting AI

- Map key stakeholders and clarify roles and responsibilities

- Recognise skill and competence gaps that must be addressed

- Design AI initiatives that align with principles of transparency, accountability, and fairness

Figure 1. The framework summarising the workshop’s thematic discussions

In conclusion, the workshop underscored that a stakeholder-oriented, challenge-driven approach is key to enabling responsible AI adoption. By starting with specific domain needs and mapping corresponding skills and knowledge, organisations can more effectively navigate the complex landscape of AI integration.